Embeddings, Attention, FNN and Everything You Wanted to Know but Were Afraid to Ask

Introduction

With this article, I’m attempting to start a series dedicated to LLMs (Large Language Models), neural networks, and everything related to AI. The goals of writing these articles are, of course, self-serving, as I myself have relatively recently started diving into these topics and encountered a situation where there’s a wealth of information, articles, and documents written in fine print with complex diagrams and formulas. When reading them, by the time you finish a paragraph, you forget what the previous one was about. Therefore, here I will try to describe the essence of the subject matter at a conceptual level - and thus I promise to avoid mathematical formulas and intricate graphs as much as possible, which inevitably make readers want to close their browser tab and visit the nearest liquor store. So - no formulas (except the simplest ones), no complexity, no pretense to appear smarter than I am. Your grandmother should be able to understand these articles, and if that doesn’t happen - it means I’ve failed at my task.

So, Embeddings

This is a very good word: to sound solid, don’t forget to drop it into any conversation about AI. The chances that someone will ask you what it means and start asking follow-up questions are minimal; at the very least your authority in the eyes of others won’t suffer, and who wants to reveal their ignorance by asking questions? Nevertheless, since you’ll probably have to go to job interviews again soon, let’s try to understand the essence of the phenomenon at a high, abstract level.

Let’s take the word “cat”. Let’s take an abstract but already trained LLM. Somewhere in the depths of its matrices (its dictionary of vectors), it contains this word. It looks something like this:

| Word | Vector |
|---|---|
| Cat | [0.2, -1.3, 3.4, 5.6…] |

So - what do these vector numbers mean? These numbers are the coordinates of the cat in semantic dimensions.
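To make the table concrete, here is a minimal Python sketch of an embedding lookup: the model keeps one row of numbers per vocabulary entry and simply fetches the row for the word it sees. The vocabulary, the `embed` helper and every number apart from the cat’s four coordinates from the table are hypothetical.

```python
# A toy embedding table. The vocabulary and most numbers are made up for
# illustration; a real LLM learns these values during training, works with
# tokens (often pieces of words) and uses thousands of columns, not four.
vocab = {"cat": 0, "dog": 1, "tractor": 2}

embedding_table = [
    [0.2, -1.3, 3.4, 5.6],   # row 0: "cat"
    [0.3, -1.1, 3.0, 5.1],   # row 1: "dog"
    [-4.0, 2.2, -0.7, 0.1],  # row 2: "tractor"
]


def embed(word: str) -> list[float]:
    """Look up the row (the 'coordinates') that belongs to a word."""
    return embedding_table[vocab[word]]


print(embed("cat"))  # [0.2, -1.3, 3.4, 5.6]
```

Note that the lookup itself involves no intelligence at all; everything interesting lives in how the numbers in the table were obtained.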

But how can a soulless machine have a concept of semantic dimensions? Right, it can’t. And yet the dimensions exist. Where do they come from? They emerge from training the model on the human-generated text it could reach during the training phase. (For those who want details: Hugging Face Space.) But we’ll talk about the training details in future articles.

Let’s imagine that some extraterrestrial being asks you to explain what a cat is. How would you do it? Obviously, you would go through its characteristics:

- Cat? Well, it’s small (relative to what? A virus? A bacterium? A blue whale? We need a measurement scale, let’s say from 0 to 10)

- It’s fluffy (How much? Again, we need a scale. What about a Sphynx?)

- Well, it meows (Frequency characteristics? Spectrum shape?)

- It’s quite intelligent (Again, we need a scale of intelligence, and how do we even measure it?)

During training, the model analyzed words that frequently appeared near the word “cat” and calculated its characteristics based on this data. For example, words like “fluffy”, “small”, “catches mice” and others often appeared near the word “cat”. This is, roughly, how the word’s coordinates are formed in the multidimensional feature space.

In other words, the model doesn’t operate with features (semantic characteristics) in the human sense; it only captures statistical patterns in the text. Still, dimensions loosely related to size, behavior, or degree of intelligence might emerge, even if the latter isn’t meant literally.
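The idea of “words that appear near cat” can be illustrated with a few lines of Python that count neighbours inside a small window. This is only a sketch of the intuition: the corpus, the `neighbors` helper and the `window` size are made up, and real models do not store raw counts. They learn their coordinates through training (word2vec-style methods, or the LLM’s own training objective), and the result merely tends to reflect such co-occurrence statistics.

```python
from collections import Counter

# A tiny, made-up corpus standing in for "text written by humanity".
corpus = [
    "the small fluffy cat catches mice",
    "my cat is small and fluffy",
    "the fluffy cat sleeps on the sofa",
    "a huge tractor plows the field",
]


def neighbors(word: str, sentences: list[str], window: int = 2) -> Counter:
    """Count the words that appear within `window` positions of `word`."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.split()
        for i, token in enumerate(tokens):
            if token != word:
                continue
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # skip the word itself
                    counts[tokens[j]] += 1
    return counts


# "small" and "fluffy" top the list (2 occurrences each); "tractor" never shows up.
print(neighbors("cat", corpus).most_common(3))
```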

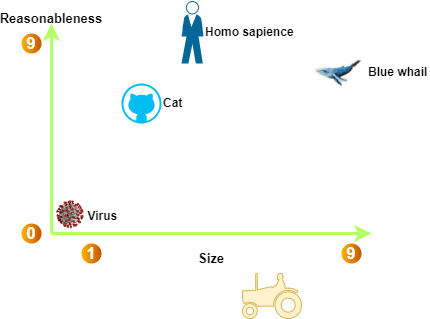

If we simplify the multidimensional space to two dimensions, we can imagine where the coordinates of “cat” would be relative to other entities:

Semantic coordinates of words in the space of animacy and size

So a cat is quite intelligent and small in size. For comparison, other representatives of our fauna and even a tractor are shown, as a kind of opposite to the semantic meaning of a cat: large and unintelligent. Of course, this is an extreme simplification, because modern models use hundreds or thousands of such dimensions (768 in the smallest GPT-2, 4096 in LLaMA-7B). One could imagine, for example, dimensions such as:

- Physical characteristics
  - Size
  - Weight
  - Shape
  - Color
- Behavioral characteristics
  - Intelligence
  - Activity
  - Danger
  - Sociability
- Functional and role features
  - Utility
  - Domestication
- Emotional coloring
- Temporal affiliation
- …
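The two-dimensional picture from earlier can be mimicked in Python: give each word a point on two axes and measure straight-line distances between the points. The axes (intelligence and size, each on a 0 to 10 scale), the word list and all coordinates are invented to mirror the idea of the figure; real embedding dimensions carry no such labels.

```python
import math

# Hypothetical 2D coordinates: (intelligence, size), both on a 0-10 scale.
# The numbers are invented to mirror the picture, not taken from any model.
coords = {
    "cat":      (7.0, 2.0),
    "dog":      (7.5, 3.5),
    "mouse":    (4.0, 0.5),
    "elephant": (6.0, 9.5),
    "tractor":  (0.5, 8.0),
}


def distance(a: str, b: str) -> float:
    """Euclidean (straight-line) distance between two words in the toy space."""
    return math.dist(coords[a], coords[b])


for other in ("dog", "mouse", "elephant", "tractor"):
    print(f"cat -> {other}: {distance('cat', other):.2f}")
```

With these made-up numbers the dog lands closest to the cat and the tractor farthest away, which is exactly the kind of relationship the figure is meant to convey.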

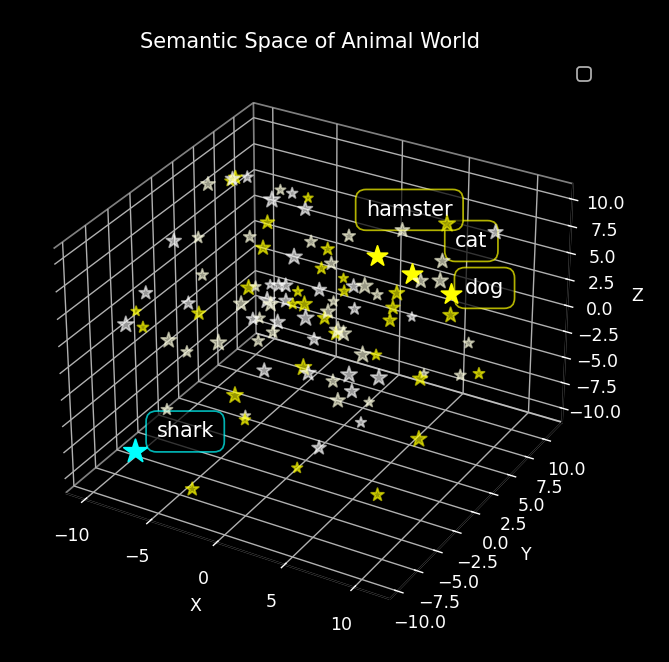

To make this easier to picture, imagine a three-dimensional space, a galaxy, that stands for the semantic space. Each star in it represents a word, and the star’s coordinates determine the word’s semantic position. Somewhere in this galaxy there will be a star named “cat”. Near it might be stars named “dog”, “hamster”, and “mouse”, since these words are semantically close to each other. “Shark”, however, will be further away: while it also belongs to the animal kingdom, it’s in the class of cartilaginous fish.
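Finding a word’s “neighbouring stars” is commonly done by ranking the other words by cosine similarity, which measures how closely two vectors point in the same direction. Below is a minimal sketch with four unnamed dimensions; the vectors and the `nearest` helper are hypothetical, and the numbers were chosen so that the ranking mirrors the example in the text.

```python
import math

# Hypothetical 4-dimensional coordinates ("star positions"); the numbers are
# invented and the dimensions are deliberately left unnamed.
stars = {
    "cat":     [0.9, 0.8, 0.1, 0.7],
    "dog":     [0.8, 0.9, 0.2, 0.8],
    "hamster": [0.9, 0.6, 0.0, 0.5],
    "mouse":   [0.9, 0.5, 0.1, 0.3],
    "shark":   [0.1, 0.3, 0.9, 0.1],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means perpendicular."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest(word: str) -> list[tuple[str, float]]:
    """Rank every other word by similarity to `word`, closest first."""
    others = [(w, cosine(stars[word], vec)) for w, vec in stars.items() if w != word]
    return sorted(others, key=lambda pair: pair[1], reverse=True)


for name, score in nearest("cat"):
    print(f"{name:8s} {score:.3f}")
```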

Coordinates in semantic space

And now let’s borrow an idea from physics (yes, string theory, which works with more than three spatial dimensions) and imagine that our space has many more than three dimensions, each of which is its own semantic axis.

Now that we’ve figured out embeddings, let’s move on to the attention mechanism.

Attention, Attention!

So - we’ve figured out that we have some multidimensional semantic space, but at the level of individual words. But what about homonyms? For example - “I have a new bank”. Is it a financial institution or the edge of a river? We can understand which bank is being referred to from the context. For example - from the previous phrase. If I said before - “I’m going fishing,” - then it becomes clear with a high degree of probability which bank is being discussed. Although the probability isn’t 100%.
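A crude way to see why context helps: nudge the ambiguous vector for “bank” toward the average of the words around it, then check which sense it ends up closer to. This sketch uses a plain average where a real model would use learned attention weights, and the two axes (“water/nature” and “finance”), the vectors, the helper names and the `mix` factor are all hypothetical.

```python
import math

# Made-up 2D vectors: axis 0 ~ "water/nature", axis 1 ~ "finance".
vectors = {
    "bank":    [0.5, 0.5],  # ambiguous: halfway between both senses
    "going":   [0.1, 0.1],
    "fishing": [0.9, 0.0],
    "loan":    [0.0, 0.9],
}
senses = {"river bank": [1.0, 0.0], "financial bank": [0.0, 1.0]}


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def in_context(word, context, mix=0.5):
    """Blend a word's vector with the plain average of its context vectors."""
    avg = [sum(vectors[c][i] for c in context) / len(context) for i in range(2)]
    return [(1 - mix) * w + mix * a for w, a in zip(vectors[word], avg)]


def closest_sense(vec):
    """Return the sense whose prototype vector points in the most similar direction."""
    return max(senses, key=lambda s: cosine(vec, senses[s]))


print(closest_sense(in_context("bank", ["going", "fishing"])))  # river bank
print(closest_sense(in_context("bank", ["loan"])))              # financial bank
```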

How can the model understand a sentence and its meaning? Especially if we’re talking about a generative model - something like:

Prompt: “Write a story about how my learned cat said that he…”

How does the model understand what I want from it? How does it grasp the context of the entire text? Let’s describe the essence of the attention mechanism starting from the problem it solves. As we saw in the example above, we need to understand the relationships between words in the source text (let’s simplify it to one sentence for now): for example, that “learned” is a characteristic of the cat, or that “he” again refers to the cat. Of course, we can’t determine all such connections with 100% certainty, but we can at least do it with high probability.

Let’s imagine that I’m a detective investigating the poisoning of a wealthy person. Naturally, I determine the circle of suspects - who could have done it and why. I have a certain database (let’s call it “Query”) where I can look up information about each suspect. I also have another database (“Key”) with detailed information about everyone who was near the victim that day. And I have a third database (“Value”) with specific details about each person’s actions.

In model terms, database #1 (“Query”) turns the word we are currently interested in, say “cat”, into a question about it. Database #2 (“Key”) is like a card file with a description of every word in the input text. We compare the query with each word’s key, and this gives us the significance (weight) of each word in the source text for the given one.

But there’s also database #3 (“Value”). It contains the actual data that we will use to update the representation of the word “cat”, taking the context into account.

I’ll also add that we have several sets of such “databases” (attention heads), for example 12, and each one can learn to focus on its own kind of semantic context.
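Several heads simply means running the same attention computation several times in parallel, each with its own weights, and gluing the results together. Here is a minimal pure-Python sketch with 2 heads instead of 12 to keep it short. The random matrices stand in for learned weights, the token vectors are random placeholders, and the final output projection that real transformers apply after concatenation is omitted.

```python
import math
import random


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]


def attention_head(xs, w_q, w_k, w_v):
    """Scaled dot-product self-attention for one head."""
    qs = [matvec(w_q, x) for x in xs]  # queries
    ks = [matvec(w_k, x) for x in xs]  # keys
    vs = [matvec(w_v, x) for x in xs]  # values
    scale = math.sqrt(len(ks[0]))
    out = []
    for q in qs:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in ks])
        out.append([sum(w * v[i] for w, v in zip(weights, vs)) for i in range(len(vs[0]))])
    return out


def multi_head(xs, heads):
    """Run each head independently, then concatenate the results per token."""
    per_head = [attention_head(xs, *h) for h in heads]
    return [sum((h_out[t] for h_out in per_head), []) for t in range(len(xs))]


rng = random.Random(0)
d_model, n_heads = 8, 2
d_head = d_model // n_heads
tokens = ["my", "learned", "cat", "said"]
xs = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in tokens]
heads = [tuple(random_matrix(d_head, d_model, rng) for _ in range(3)) for _ in range(n_heads)]

out = multi_head(xs, heads)
print(len(out), len(out[0]))  # 4 8: four tokens, each with 2 heads x 4 numbers
```

Splitting into smaller heads keeps the total amount of computation roughly the same while letting each head specialise on a different kind of relationship.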

And once again. Step one: we asked the question “What do we know about the cat?” That is, we passed the vector of the word “cat” to the “Query” database and received a new vector built from the cat’s features. In other words, we refined the query.

Step two: we passed every other word of the text through database #2 (“Key”) and compared the resulting keys with the query from step one, asking: “Which words in the text can help clarify what kind of cat this is?” It turns out these are “learned” and “said”.
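Steps one and two boil down to a dot product between the query of “cat” and the key of every word, scaled by the square root of the vector length and turned into weights that sum to 1 with a softmax. The vectors below are invented (a real model computes them with learned matrices), and only some words of the prompt are included to keep the example short.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical query for "cat" (step one) and keys for other words (step two).
query_cat = [1.0, 0.5, 0.0]
keys = {
    "write":   [0.0, 0.1, 1.0],
    "story":   [0.1, 0.0, 0.8],
    "my":      [0.3, 0.0, 0.2],
    "learned": [1.2, 0.6, 0.0],
    "said":    [0.9, 1.0, 0.1],
}

d = len(query_cat)
# Scaled dot product: how well each key "answers" the query.
scores = {w: sum(q * k for q, k in zip(query_cat, key)) / math.sqrt(d) for w, key in keys.items()}
weights = dict(zip(scores, softmax(list(scores.values()))))

for word, w in sorted(weights.items(), key=lambda kv: -kv[1]):
    print(f"{word:8s} {w:.2f}")
```

“learned” and “said” come out on top, although the other words still receive some weight: attention is a soft preference, not a hard selection.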

Step three: So, when we calculated that “cat” is connected with the words “learned” and “said”, we look at what information these words store in database #3 (“Value”).

For example: “Learned” in Value carries features: “intelligence, knowledge”. “Said” in Value carries features: “speech, communication”. “My” in Value carries features: “belonging, indication of owner”.

Now we weigh these features by how well each word matches the Query (cat). If the model knows about talking cats from fairy tales, then “said” will get a high weight. If the model isn’t familiar with fairy tales, it might decide that “said” isn’t important at all (it has never heard of talking cats). Then the model tries to generate a continuation, and since it was trained on fairy tales, it will borrow something from there. What did talking cats most often say in stories?
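Step three is a weighted sum: each word’s Value is multiplied by its attention weight, the results are added up, and transformers then add this to the word’s own vector (a residual connection). The sketch below compares two invented sets of weights, one for a model that “knows” talking cats and one that doesn’t. The feature axes are human-readable only for illustration; real value vectors carry no such labels, and the `update` helper is hypothetical.

```python
# Hypothetical value vectors on three invented, human-readable axes:
# [intelligence/knowledge, speech/communication, belonging/owner]
values = {
    "my":      [0.0, 0.0, 1.0],
    "learned": [1.0, 0.1, 0.0],
    "said":    [0.1, 1.0, 0.0],
}
cat = [0.3, 0.0, 0.0]  # hypothetical starting vector for "cat" on the same axes


def update(vec, weights):
    """Add the attention-weighted sum of values to the word's own vector."""
    context = [sum(weights[w] * values[w][i] for w in values) for i in range(len(vec))]
    return [x + c for x, c in zip(vec, context)]


# Invented attention weights (each set sums to 1).
knows_fairy_tales = {"my": 0.2, "learned": 0.4, "said": 0.4}
never_heard_of_talking_cats = {"my": 0.3, "learned": 0.65, "said": 0.05}

print([round(x, 2) for x in update(cat, knows_fairy_tales)])
print([round(x, 2) for x in update(cat, never_heard_of_talking_cats)])
```

In the first case the updated “cat” picks up a noticeable “speech” component; in the second it barely changes along that axis, which is the difference the paragraph above describes.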

So the model will continue our original text something like this:

Prompt: Write a story about how my learned cat said that he…

Model’s response - “had seen much and knows much”

Attention mechanism in action
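Generation itself is a loop: predict the next word, append it, repeat. The toy sketch below uses a hypothetical hand-written table of next-word probabilities that looks only at the last word; a real LLM computes these probabilities from the whole context via attention, and often samples from them rather than always taking the most likely word.

```python
# Hypothetical next-word probabilities: a toy stand-in for a trained model.
next_word_probs = {
    "he":    {"had": 0.6, "was": 0.3, "liked": 0.1},
    "had":   {"seen": 0.7, "eaten": 0.3},
    "seen":  {"much": 0.8, "a": 0.2},
    "much":  {"and": 0.9, "<end>": 0.1},
    "and":   {"knows": 0.6, "heard": 0.4},
    "knows": {"much": 1.0},
}


def generate(words, max_new_words=6):
    """Greedy decoding: always append the most probable next word."""
    words = list(words)
    for _ in range(max_new_words):
        options = next_word_probs.get(words[-1])
        if not options:
            break  # the toy table has no idea what comes next
        best = max(options, key=options.get)
        if best == "<end>":
            break
        words.append(best)
    return words


prompt = "my learned cat said that he".split()
print(" ".join(generate(prompt)[len(prompt):]))  # had seen much and knows much
```

Without the `max_new_words` cap this table would cycle through “much and knows much” forever, a small illustration of why looking at the whole context, and not just the last word, matters.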